Product

·

Published

16 Jan 26

Continuous discovery is a lie (that 62% of teams tell themselves)

How Customer Intelligence platforms replace quarterly research cycles with always-on discovery that works at product velocity

Your roadmap deck says "continuous discovery." Your team principles mention "always be learning." Your job description promised "research-driven decision making."

Here's what actually happens.

You run discovery sprints twice a year. You recruit users six weeks before you need them. You synthesize findings three weeks after interviews. By the time insights reach your roadmap, the market moved and your assumptions are stale.

Only 38% of product teams actually conduct continuous research. The other 62% know they should be doing it. They just can't figure out how to make it work operationally.

The problem: continuous discovery fails on operations, not principle

Every product team agrees continuous discovery is valuable. The breakdown isn't philosophical. It's operational.

Recruiting participants takes 6-8 weeks in B2B. You need to get past gatekeepers. Navigate legal reviews. Coordinate calendars. Get budget approval. Run the research. Synthesize findings.

By the time you have insights, the question changed.

Research teams are bottlenecked. 97% of researchers experience recruitment challenges. 70% struggle to find matching participants. 45% face slow recruitment. 41% deal with no-shows.

Even with dedicated researchers, they can only run so many studies. The human capacity constraint means continuous discovery becomes quarterly discovery at best.

Feedback loops are too slow. You launch a feature. Users experience it. Some love it, some hate it, most are confused by one specific thing.

But you won't know for weeks.

By the time you recruit, interview, and synthesize, you've committed to your next sprint. The insights arrive too late to influence decisions being made right now.

Continuous discovery requires continuous operations. But research operations are designed for periodic, planned studies. You're trying to run a 24/7 process with infrastructure built for quarterly projects.

And you're missing the conversations already happening. Users tell you what they need in support tickets, sales calls, onboarding emails, and community forums. This feedback exists, but it's scattered, slow to surface, and disconnected from your research process. By the time patterns emerge, you've already shipped the next sprint.

The Propane solution: AI research agents that work at product velocity

Teams are experimenting with AI for research. Some use ChatGPT to analyze feedback manually. Others build custom agents with Claude or GPT-4 to interview users. A few connect AI tools to their product with APIs and prompts.

These DIY approaches work if you have the time and technical chops to maintain them. But they're single-player setups that break when that person leaves. And they still require you to orchestrate everything: triggering interviews, managing conversations, synthesizing responses.

What Propane offers: Autonomous research agents that work at product velocity without the DIY overhead.

Propane embeds research agents directly in your product. These agents conduct contextual interviews at key moments without human scheduling, recruitment, or coordination.

When a user completes their first workflow, the agent asks about their experience. When someone hits a limitation, the agent understands what they were trying to accomplish. When a feature goes unused, the agent discovers why.
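To make the trigger idea concrete, here is a minimal sketch in Python. It is not Propane's API. The event names, the `TRIGGERS` table, and `pick_opening_question` are all hypothetical, used only to illustrate how product moments could map to interview openers.

```python
from typing import Optional

# Hypothetical product events mapped to opening interview questions.
# These names are illustrative assumptions, not a real event schema.
TRIGGERS = {
    "first_workflow_completed": "How did completing your first workflow go?",
    "limit_reached": "What were you trying to accomplish when you hit this limit?",
    "feature_unused_30d": "What has kept you from trying this feature?",
}


def pick_opening_question(event_name: str) -> Optional[str]:
    """Return the opening question for an event, or None if no interview should start."""
    return TRIGGERS.get(event_name)
```

Events with no entry in the table return `None`, so ordinary activity such as page views would not start a conversation.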

Research stops being scheduled events. It becomes always-on intelligence that works while you sleep.

How it works

You define what you need to learn. "Understand why users abandon onboarding" or "Discover what jobs users hire this feature to accomplish."

Propane agents embed at the moments that matter. After onboarding. When users hit limits. Before churn signals emerge.

The agents conduct conversational interviews autonomously. They adapt based on what users say. Not rigid surveys, actual conversations.

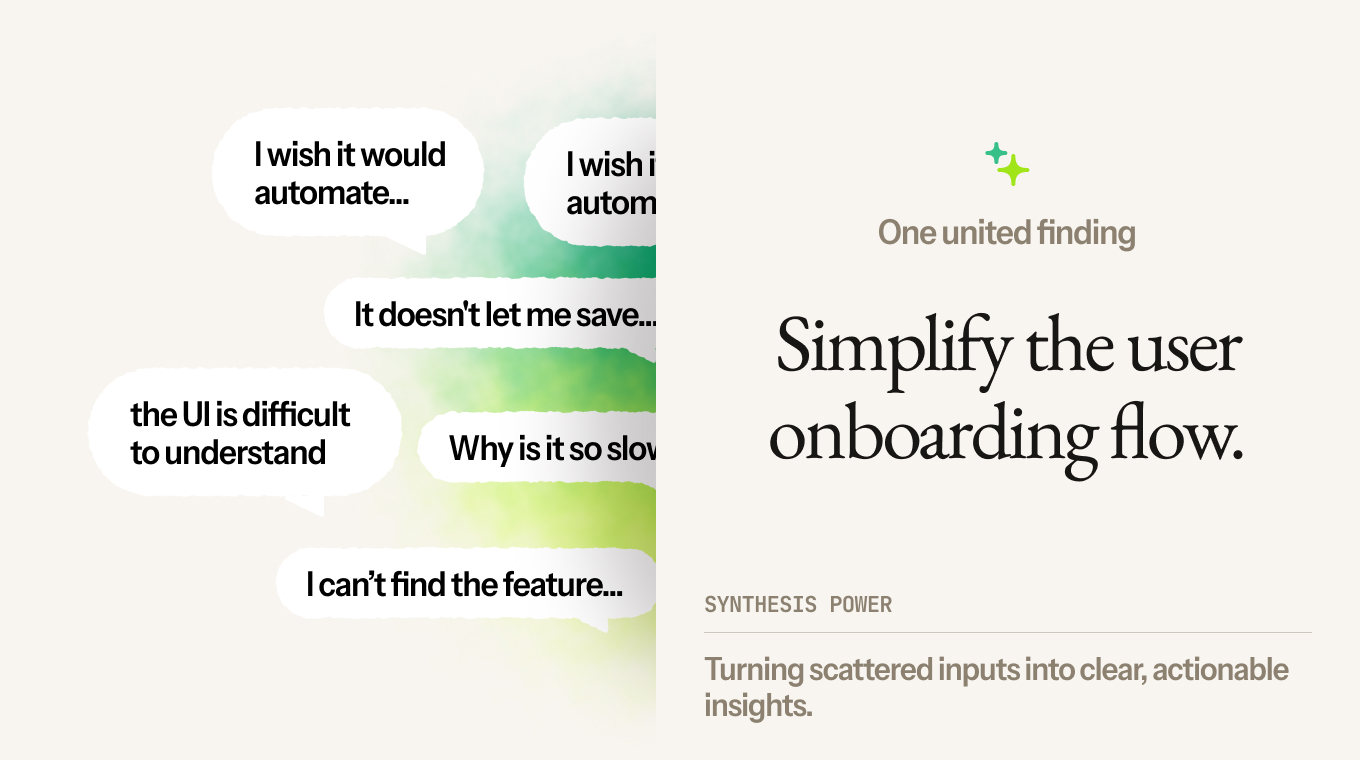

Insights synthesize automatically into clear signals. You see patterns across hundreds of conversations. "47 users describe the same workflow problem using different words."

These signals feed directly into your workflow with actionable insights:

Slack notifications when patterns emerge: "67% of enterprise users (23 conversations) mention SSO in onboarding. Pattern detected across 3 segments. Recommended action: Prioritize SSO for Q2."

Jira tickets with research backing: "Feature Request: Bulk export functionality. Mentioned by 34 users in past 30 days. Correlated with 78% higher retention in power user segment. 12 direct quotes attached."

Roadmap intelligence showing impact: "Onboarding step 3 confuses 89 users across mid-market segment. 47% abandon at this step. Top blocker to activation. Priority: High."

Not vague feedback summaries. Clear signals showing what users need, how many are affected, which segments matter, and what to do about it.
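As a rough illustration of what sits behind a signal like the ones above, the sketch below counts how many conversations mention a theme, what share of the total that is, and which segments are affected. It assumes each conversation has already been tagged with themes. The `Conversation` type, `detect_signal`, and the `min_mentions` threshold are hypothetical and do not describe how Propane works internally.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class Conversation:
    user_id: str
    segment: str
    themes: FrozenSet[str]  # themes assumed to be tagged upstream


def detect_signal(
    conversations: List[Conversation], theme: str, min_mentions: int = 5
) -> Optional[dict]:
    """Summarize a theme as a signal, or return None if it is mentioned too rarely."""
    matching = [c for c in conversations if theme in c.themes]
    if not conversations or len(matching) < min_mentions:
        return None
    return {
        "theme": theme,
        "mentions": len(matching),
        # Share of all conversations that mention the theme, as a whole percent.
        "share_pct": round(100 * len(matching) / len(conversations)),
        # Distinct segments in which the theme appears.
        "segments": sorted({c.segment for c in matching}),
    }
```

The threshold keeps a single loud comment from being reported as a pattern. Where to set it is a judgment call that depends on conversation volume.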

What happens when discovery is actually continuous

Organizations with research embedded in strategy report 2.7x better outcomes. They see 3.6x more active users and 2.8x increased revenue compared to teams doing periodic research.

The difference isn't the research methods. It's the velocity.

Product teams must adapt or fall behind

A B2B platform ran discovery every 6 months because that's when they could get research resources. By the time insights made it into sprint planning, they were three to four months stale.

They couldn't answer basic questions in real-time. "Why are users dropping off at step 3?" "Is this feature solving the job we thought it would?" Questions came up every sprint. Answers came twice a year.

They implemented Propane research agents. Agents conducted 340 contextual interviews in the first month.

What they discovered: Step 3 of onboarding wasn't confusing because of UX. It was confusing because 67% of users didn't understand why they needed to complete it. The value prop wasn't landing. This only became visible when you talked to users right after they experienced it.

An enterprise feature looked successful in usage metrics, but users described it as "painful." They were completing the workflow because they had to, not because it worked well. Quantitative data said ship it. Qualitative intelligence said fix it.

89 users mentioned the same workflow limitation using completely different language. The pattern was invisible in feature requests because users didn't know what to ask for.

The shift:

PMs stopped waiting for quarterly research to answer sprint-level questions. When engineering asked "should we prioritize X or Y," the answer came from recent user conversations, not 4-month-old studies.

Roadmap planning changed from "what should we build" debates to "here's what users need based on 200+ conversations this quarter."

Why AI agents work when surveys don't

Surveys tell you what users say they want in abstract scenarios. Contextual interviews reveal what users actually need when they're experiencing the problem.

Periodic research creates snapshots. Continuous discovery shows you how needs evolve and catches emerging patterns before they become obvious.

Traditional research requires human capacity that doesn't scale. AI agents operate 24/7 and interview unlimited users without recruitment bottlenecks.

The goal isn't to eliminate research teams. It's to free them from operational constraints. AI agents handle the operational scale. Human researchers focus on work that requires expertise: investigating complex problems, validating surprising findings, translating insights into strategy.

80% of research professionals now use AI in their workflow. The ones succeeding aren't using it to replace research. They're using it to conduct research at the velocity modern product development requires.

Getting started

Day one: Connect Propane to your product. Define what you need to learn. "Why do users abandon onboarding?" "What jobs are users hiring this feature for?"

Day three: Agents are embedded at key product moments. After onboarding. When users hit limits. Before churn signals emerge. You can tailor agents to each scenario. Customize them by persona, market segment, or user journey stage. Different questions for enterprise users versus SMB. Different moments for power users versus casual users.

30 days later: You have a constant flow of intelligence. Not quarterly snapshots. Continuous signal from ongoing conversations. All the data comes into the same repository. Patterns emerge across hundreds of conversations.

The insights feed directly into your workflow.

Want to see how Propane makes continuous discovery operationally possible? Book a demo.

Continuous discovery requires continuous operations. AI agents make it possible.